Hackathon: Optimizing AI-driven workflows within a mission-critical cyber-physical system

Damien Gratadour (CNRS – Observatoire de Paris), Bartomeu Pou Mulet (Barcelona Supercomputing Center)

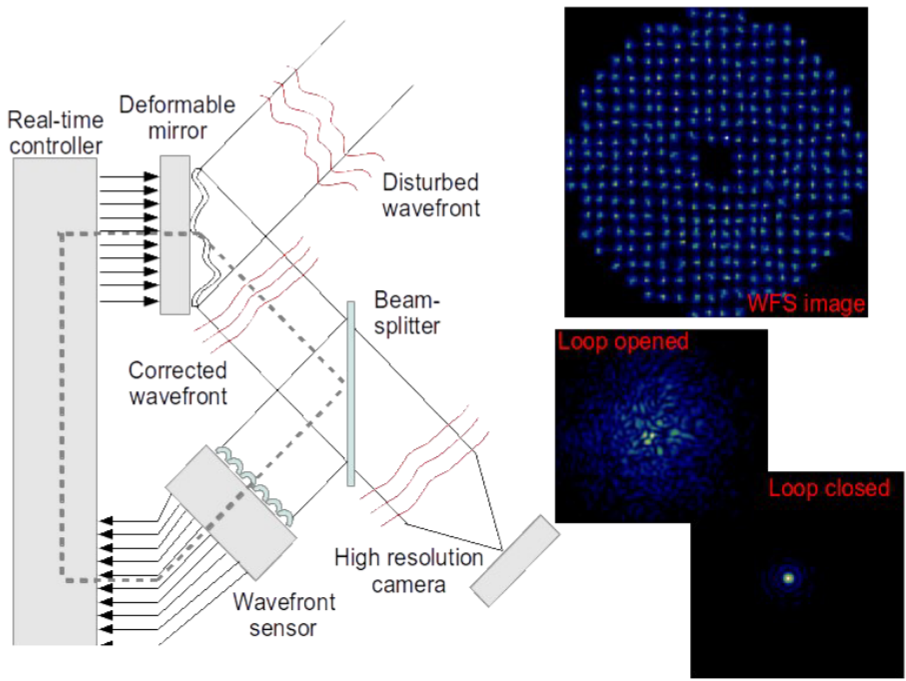

The largest ground-based telescopes will soon reach ~40 m in diameter, providing the angular resolution and collecting area required to detect fainter exoplanets. But to reach the required contrast, they must overcome the optical distortion induced by atmospheric turbulence. To compensate for this distortion and maximize the achievable contrast, Adaptive Optics (AO) technologies were developed for astronomy starting in the 1990s, and AO is now essential for the largest current optical telescopes. In an AO system, a wavefront sensor (WFS) measures the atmospheric distortion at a high frame rate, and a deformable mirror (DM) compensates for it. The sub-system linking these two components, which translates wavefront measurements into commands for the DM actuators, is the real-time controller (RTC). This sub-system, which can be seen as a mission-critical cyber-physical system, must operate at high speed (~kHz rate) to keep up with the rapidly changing atmospheric turbulence. It must ingest raw data streaming from the sensors, process it through batched centroiding and matrix-vector multiplications, and deliver several million commands per second to the deformable optics.
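The RTC data path described above can be sketched in a few lines of NumPy: a batched center-of-gravity centroider turns each sub-aperture image into x/y slopes, and a single matrix-vector multiply maps the slopes to actuator commands. This is a minimal sketch, assuming a plain center-of-gravity estimator, an `(n_subap, npix, npix)` image layout and a precomputed command matrix of shape `(n_act, 2 * n_subap)`; the function names are hypothetical, and a production RTC implements the same steps in optimized GPU code.

```python
import numpy as np


def centre_of_gravity(subaps):
    """Batched centre-of-gravity centroiding.

    subaps: (n_subap, npix, npix) array indexed [subap, row (y), column (x)].
    Returns a (2 * n_subap,) slope vector [x_0..x_{n-1}, y_0..y_{n-1}] in pixels,
    relative to each sub-aperture centre.
    """
    npix = subaps.shape[-1]
    coords = np.arange(npix) - (npix - 1) / 2.0  # pixel coordinates centred on 0
    flux = subaps.sum(axis=(1, 2))
    flux = np.where(flux > 0, flux, 1.0)  # avoid dividing by zero on dark sub-apertures
    cx = (subaps.sum(axis=1) * coords).sum(axis=1) / flux  # profile along x (columns)
    cy = (subaps.sum(axis=2) * coords).sum(axis=1) / flux  # profile along y (rows)
    return np.concatenate([cx, cy])


def compute_commands(subaps, command_matrix):
    """One RTC step: centroid all sub-apertures, then one matrix-vector multiply."""
    slopes = centre_of_gravity(subaps)
    return command_matrix @ slopes  # (n_act, 2 * n_subap) @ (2 * n_subap,) -> (n_act,)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    frames = rng.poisson(50.0, size=(40, 8, 8)).astype(np.float64)  # 40 toy sub-apertures
    cmat = rng.standard_normal((30, 80))  # toy command matrix for 30 actuators
    print(compute_commands(frames, cmat).shape)
```

The loop repeats these two steps for every WFS frame, so at a 1 kHz rate the whole data path has to fit within roughly a millisecond per frame.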

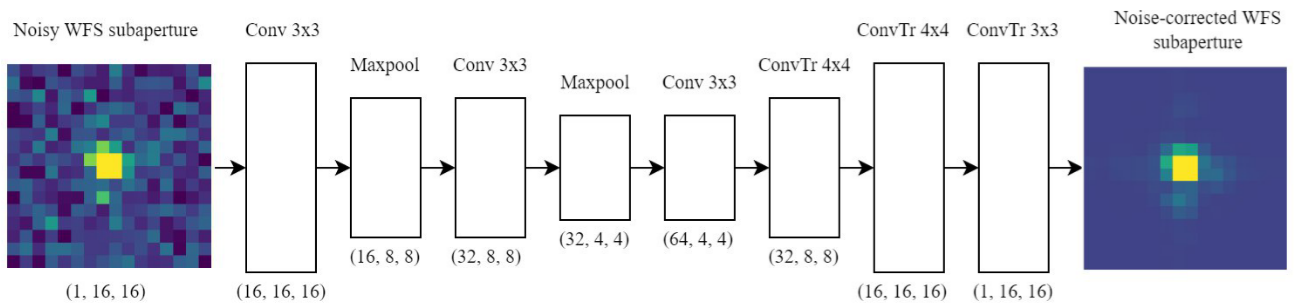

Our team has proposed using deep learning techniques to pre-process WFS images for denoising. The approach relies on an architecture similar to the denoising autoencoder, which learns a mapping from noisy input to noise-free output. The proposed architecture consists of convolutional layers combined with max-pooling operations, a standard building block of current image-processing networks. While the original work on denoising and convolutional autoencoders aimed at learning more robust hidden-layer representations, to be used as an initialization point for other networks in tasks such as image classification, the same architecture can be applied directly to denoising.
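A denoising autoencoder is trained on pairs of noisy inputs and noise-free targets. In the hackathon these pairs would come from COMPASS; as a stand-in, this minimal sketch assumes a toy sub-aperture model (a Gaussian spot at a random position) corrupted by photon (Poisson) noise and Gaussian read noise. The helper names, image size, flux and noise parameters are hypothetical and chosen only for illustration.

```python
import numpy as np


def gaussian_spot(npix, x0, y0, fwhm):
    """Noise-free Gaussian spot with unit total flux (toy spot model)."""
    sigma = fwhm / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    yy, xx = np.mgrid[0:npix, 0:npix]
    spot = np.exp(-((xx - x0) ** 2 + (yy - y0) ** 2) / (2.0 * sigma ** 2))
    return spot / spot.sum()


def make_training_pair(rng, npix=8, photons=200.0, read_noise=3.0, fwhm=2.0):
    """Return (noisy, clean): the autoencoder input and its noise-free target."""
    x0, y0 = rng.uniform(2.0, npix - 3.0, size=2)  # random spot position (pixels)
    clean = photons * gaussian_spot(npix, x0, y0, fwhm)
    # photon (shot) noise followed by Gaussian detector read noise
    noisy = rng.poisson(clean) + rng.normal(0.0, read_noise, size=clean.shape)
    return noisy.astype(np.float32), clean.astype(np.float32)


def make_dataset(n_samples, seed=0, **kwargs):
    """Stack n_samples pairs into (n_samples, npix, npix) input and target arrays."""
    rng = np.random.default_rng(seed)
    pairs = [make_training_pair(rng, **kwargs) for _ in range(n_samples)]
    noisy, clean = (np.stack(arrays) for arrays in zip(*pairs))
    return noisy, clean
```

The network is then fitted to minimize a reconstruction loss (for example mean squared error) between its output on `noisy` and the corresponding `clean` target.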

In this hackathon, we propose to enhance this denoising module, as part of a comprehensive AO RTC pipeline, along several directions:

- Workflow modeling: image denoising is one stage of a more complex pipeline. The goal here would be to derive a comprehensive model of the pipeline and find the best trade-offs to meet time-to-solution specifications.

- Towards a more frugal approach: images from WFS cameras are encoded as UINT16, so one could build a lightweight implementation of the autoencoder module that leverages reduced arithmetic precision.

- Performance: the current code processes individual sub-apertures with the same model across the whole pupil, but sub-apertures at the edge of the pupil receive less signal. One could use several models depending on the sub-aperture location.

- Portability: an interesting prospect is to study several compute architectures beyond GPUs for running the denoising inference module, and to evaluate the performance achievable on each.

- Productivity: the denoising module must be configured by selecting the appropriate model for the noise level. One could derive an automated way to optimize the execution configuration, either by detecting the noise level within the control loop and selecting the appropriate model, or by building a universal model that is agnostic to the actual noise level.
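To make the reduced-precision direction concrete, here is a minimal NumPy sketch assuming float16 as the target inference precision (other formats such as int8 are equally possible). One pitfall is that the UINT16 range (up to 65535) slightly exceeds the largest finite float16 value (65504), so raw counts should be rescaled before casting. The helpers `to_half` and `from_half` and the full-scale normalization are illustrative assumptions, not part of the existing autoencoder module.

```python
import numpy as np

UINT16_MAX = float(np.iinfo(np.uint16).max)  # 65535
FP16_MAX = float(np.finfo(np.float16).max)   # 65504, slightly below UINT16_MAX


def to_half(frame_u16, scale=UINT16_MAX):
    """Convert a UINT16 WFS frame to float16 for reduced-precision inference.

    Casting raw counts directly could overflow to inf near full scale, because
    float16 cannot represent values above FP16_MAX, so the frame is first
    normalised into [0, 1] in float32 and only then cast to float16.
    """
    return (frame_u16.astype(np.float32) / np.float32(scale)).astype(np.float16)


def from_half(frame_f16, scale=UINT16_MAX):
    """Map float16 values back to detector units (ADU) for comparison."""
    return frame_f16.astype(np.float32) * np.float32(scale)


if __name__ == "__main__":
    raw = np.arange(0, 65536, 7).astype(np.uint16)  # samples across the UINT16 range
    err = np.abs(from_half(to_half(raw)) - raw.astype(np.float32))
    print(f"max round-trip error: {err.max():.1f} ADU")
```

With an 11-bit significand, float16 resolves steps of 2^-11 (about 4.9e-4) of the normalized range near full scale, so the round-trip error reaches roughly 16 ADU for the brightest pixels. Whether that loss is acceptable for the denoiser is exactly the kind of trade-off the frugal direction would evaluate.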
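The performance and productivity directions can be combined into one dispatch step: estimate the noise level of each frame inside the loop, then pick the model trained closest to that level, with separate models for edge and central sub-apertures. In this minimal sketch, the MAD-based noise estimator, the illumination threshold, and the `registry` mapping `(region, level)` to a model are all hypothetical choices; the existing module's configuration interface is not reproduced here.

```python
import numpy as np

MAD_TO_SIGMA = 1.4826  # MAD -> standard deviation for Gaussian noise


def estimate_noise(frame):
    """Robust noise estimate from the median absolute deviation (MAD).

    Assumes most pixels are background, so the few bright spot pixels barely
    affect the medians.
    """
    med = np.median(frame)
    return MAD_TO_SIGMA * float(np.median(np.abs(frame - med)))


def is_edge_subap(illumination_fraction, threshold=0.9):
    """Hypothetical rule: 'edge' if the pupil covers less than `threshold` of the sub-aperture."""
    return illumination_fraction < threshold


def select_model(sigma, trained_levels, edge, registry):
    """Return the model trained at the noise level closest to sigma.

    registry maps (region, level) -> model, with region in {"edge", "centre"}.
    """
    levels = np.asarray(trained_levels, dtype=float)
    level = float(levels[np.argmin(np.abs(levels - sigma))])
    region = "edge" if edge else "centre"
    return registry[(region, level)]
```

A universal, noise-agnostic model would remove the selection step entirely, at the cost of training it across the full range of expected noise levels.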

This hackathon will rely on COMPASS (COMputing Platform of Adaptive opticS System), an end-to-end simulation tool for AO systems that allows users to generate synthetic but realistic AO loop data as if they were operating an actual AO system.

A comprehensive software stack has been designed to provide both high performance, through core massively parallel algorithms running on GPUs, and ease of use, through a Python-based user interface and a graphics toolkit. Main features include:

- Online multi-layer Kolmogorov turbulence generation at various altitudes

- Multi-directional raytracing through turbulence

- Multi-directional WFS spots computation

- Various control strategies and full pipelines implementing the typical AO RTC sub-system

- Realistic piezostack deformable mirror model
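For readers unfamiliar with the turbulence model, a standard way to draw a single Kolmogorov phase screen is the FFT method: filter complex white noise by the square root of the Kolmogorov phase power spectrum Φ(f) = 0.023 r0^(-5/3) f^(-11/3) and take an inverse Fourier transform. This minimal NumPy sketch is a generic textbook technique, not a description of how COMPASS performs its on-line multi-layer generation, which we do not claim to reproduce; the function and parameter names are hypothetical.

```python
import numpy as np


def kolmogorov_phase_screen(n, pixel_scale, r0, seed=None):
    """Return an (n, n) Kolmogorov phase screen in radians using the FFT method.

    n: screen size in pixels; pixel_scale: metres per pixel; r0: Fried parameter in metres.
    The result is periodic and lacks the lowest spatial frequencies (no subharmonics).
    """
    rng = np.random.default_rng(seed)
    fx = np.fft.fftfreq(n, d=pixel_scale)  # spatial frequencies in cycles per metre
    fxx, fyy = np.meshgrid(fx, fx)
    f = np.hypot(fxx, fyy)
    f[0, 0] = np.inf  # zero the piston (f = 0) term instead of dividing by zero
    # Kolmogorov phase power spectral density
    psd = 0.023 * r0 ** (-5.0 / 3.0) * f ** (-11.0 / 3.0)
    df = 1.0 / (n * pixel_scale)  # frequency-grid spacing
    noise = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
    # ifft2 includes a 1/n^2 factor; multiply it back to get a plain Fourier sum
    screen = np.fft.ifft2(noise * np.sqrt(psd) * df) * n * n
    return screen.real


if __name__ == "__main__":
    phase = kolmogorov_phase_screen(256, pixel_scale=0.05, r0=0.15, seed=42)
    print(phase.shape, phase.std())
```

Because the spectrum scales as r0^(-5/3), independent layers with Fried parameters r0_i combine into an overall r0 satisfying r0^(-5/3) = Σ r0_i^(-5/3).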

The autoencoder module is already part of this software bundle, including a Python interface to create and train new models and an inference module relying on optimized C++ code. The code will be made available to hackathon participants so they can experiment with AO systems, evaluate performance and tweak the autoencoder module.

Duration: full-day

Level: intermediate

Reasons for attending

- Actively contribute to current R&D for the largest astronomical telescopes, aimed at revealing new exoplanets and habitable worlds

- Learn more about applying AI / DNNs to real-world use cases

- Have fun with advanced technologies for simulating complex cyber-physical systems

Presenter

Damien Gratadour, Senior Research Scientist at Observatoire de Paris, CNRS.

Damien holds a PhD in Observational Astronomy from Université Paris-Diderot (2005). He was an Adaptive Optics (AO) fellow responsible for the last stages of commissioning of the Altair AO system on the Gemini North Telescope in Hawaii (2006), and then an Instrument Scientist (2007-2008) for GeMS, the Gemini MCAO System, a facility featuring 6 laser guide stars.

Since 2008, at Observatoire de Paris - PSL, Damien has been leading an original research program on high performance numerical techniques for astronomy, including modeling, signal processing and instrumentation for large telescopes. He has been the P.I. of several large national and European programs targeting AO Real-Time Controllers for giant optical telescopes based on emerging computing technologies. Since 2021, with France officially joining SKAO, he has also become strongly involved in the French effort dedicated to the construction of this giant radio telescope. In particular, he is currently the inaugural head of ECLAT, a joint laboratory between CNRS, INRIA and Atos/Eviden, set up as a long-term support structure federating resources from the academic and industrial teams that will engage in R&D work for the French contribution to the SKA.